The problem

We have all done it.

We have all launched an EC2 instance or created another pricey AWS resource for testing or demo purposes and then forgot to delete it when we no longer needed it. Often, You only notice this when the monthly invoice arrives.

That’s why monitoring all Your resources is important. However, across multiple AWS accounts, this can become tedious. Especially in sandbox or demo accounts, where different people regularly create new resources, You can quickly lose track.

The solution

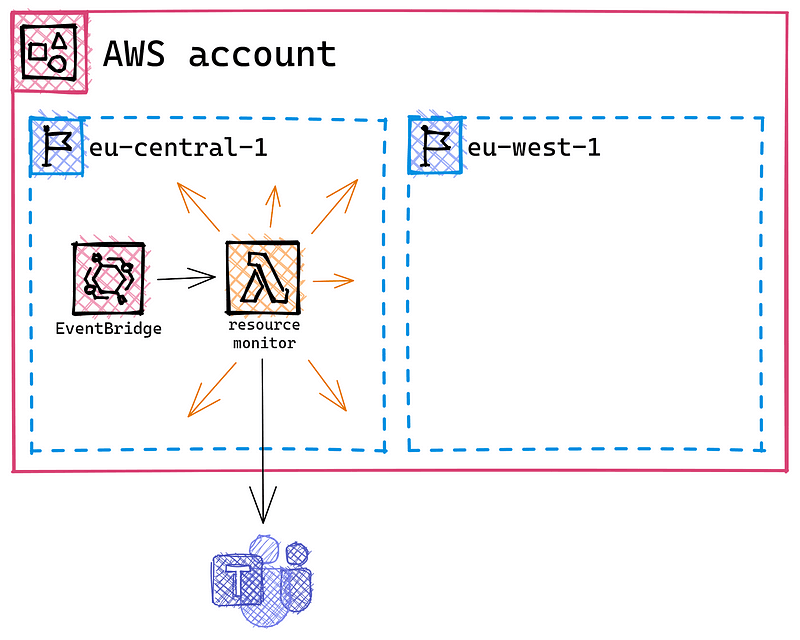

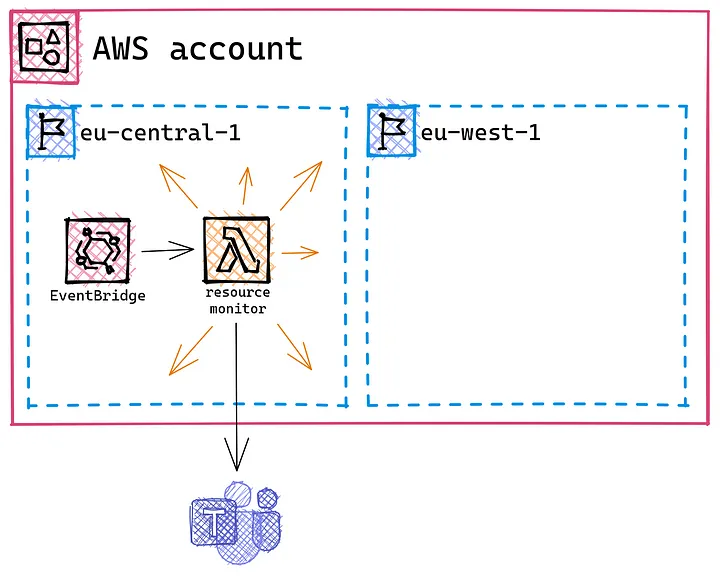

So, to prevent unpleasant surprises, we will implement a system that keeps track of Your resources for You.

The solution consists of four parts:

- Introduce a tagging convention to record who is responsible for a resource and on which date You should delete it
- Implement a Lambda function to gather all that information and determine which resources require attention
- Create an EventBridge schedule to trigger Your Lambda function at regular intervals
- Add an MS Teams channel to receive the information and display it to You and the other team members

The tagging convention

Start by introducing a tagging convention (and get Your colleagues to follow it).

To keep it simple, You will only need two to four tags per resource:

- <your custom prefix>:owner:name contains the name of the person responsible for this resource
- <your custom prefix>:owner:email contains the email address of the person responsible for this resource
- <your custom prefix>:lifetime:restricted contains a boolean value: true if You have to delete this resource at some point, false if it can stay indefinitely
- <your custom prefix>:lifetime:end contains an optional date value (mm/dd/yyyy) and tells You when to delete this resource

For more information on tagging refer to the AWS whitepaper Tagging Best Practices.
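To make the convention concrete, here is a minimal sketch of a helper that produces the four tags for one resource. The function name build_lifetime_tags, its parameters, and the example prefix acme are hypothetical and only serve as illustration. The output uses the Key/Value list shape that, for example, EC2's create_tags call accepts.

```python
from datetime import date
from typing import Optional


def build_lifetime_tags(
    prefix: str,
    owner_name: str,
    owner_email: str,
    restricted: bool,
    end: Optional[date] = None,
) -> list[dict[str, str]]:
    """Build the convention's tags as a list of Key/Value dicts."""
    # An empty prefix means the keys start directly with "owner:" / "lifetime:".
    key_prefix = f"{prefix}:" if prefix else ""
    tags = [
        {"Key": f"{key_prefix}owner:name", "Value": owner_name},
        {"Key": f"{key_prefix}owner:email", "Value": owner_email},
        # Stored as lowercase "true"/"false" so a tag filter on "true" matches.
        {"Key": f"{key_prefix}lifetime:restricted", "Value": str(restricted).lower()},
    ]
    if end is not None:
        # mm/dd/yyyy, as defined by the convention.
        tags.append({"Key": f"{key_prefix}lifetime:end", "Value": end.strftime("%m/%d/%Y")})
    return tags


# Hypothetical example values.
example_tags = build_lifetime_tags(
    "acme", "Jane Doe", "jane.doe@example.com", True, date(2024, 12, 31)
)
```

Generating the tags from one place keeps the date format and the spelling of the boolean consistent, which matters because the Lambda function matches on the exact strings.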

The Lambda function

Now that You have a tagging convention, set up a Lambda function to use those tags for automation.

Here is the code I used:

import os
import json
import logging
from dataclasses import dataclass
from datetime import datetime
from typing import Any

import requests
import boto3

logger = logging.getLogger()
if 'AWS_EXECUTION_ENV' in os.environ and 'LOG_LVL' in os.environ:
    # running in Lambda with an explicit log level
    LOG_LVL = os.environ['LOG_LVL']
    logger.setLevel(level=LOG_LVL)
else:
    # for local debugging
    logger.setLevel(level='DEBUG')

# optional custom prefix for all tag keys, e.g. 'power' -> 'power:lifetime:end'
if 'TAG_PREFIX' in os.environ:
    TAG_PREFIX = os.environ['TAG_PREFIX'] + ':'
else:
    TAG_PREFIX = ''

@dataclass
class BotoClients:
    # created once when the module is loaded and reused by warm invocations
    tagging: Any = boto3.client('resourcegroupstaggingapi')

clients = BotoClients()

def init_clients():
    global clients
    if not clients:
        clients = BotoClients()

def main(event, context):
    # get all resources with restricted lifetime
    resources = []
    tag_filters = [
        {
            'Key': f'{TAG_PREFIX}lifetime:restricted',
            'Values': [
                'true',
            ]
        },
    ]
    # first page
    response = clients.tagging.get_resources(
        TagFilters=tag_filters
    )
    pagination_token = response.get('PaginationToken', '')
    resources = resources + response['ResourceTagMappingList']
    # following pages: pass the token on so that each call returns the next page
    while pagination_token != '':
        response = clients.tagging.get_resources(
            TagFilters=tag_filters,
            PaginationToken=pagination_token
        )
        pagination_token = response.get('PaginationToken', '')
        resources = resources + response['ResourceTagMappingList']
    logger.debug('### resources with limited lifetime')
    logger.debug(resources)

    # filter critical resources
    critical = []
    present = datetime.now()
    for resource in resources:
        # a restricted resource without an end date counts as due
        lifetime_end = present
        for tag in resource['Tags']:
            if tag['Key'] == f'{TAG_PREFIX}lifetime:end':
                lifetime_end = datetime.strptime(tag['Value'], '%m/%d/%Y')
        if lifetime_end <= present:
            critical.append(resource)
    logger.debug('### resources that exceeded lifetime')
    logger.debug(critical)
    if not critical:
        logger.debug('No resources that exceed lifetime')
        return

    # format resources
    message_text = ''
    for resource in critical:
        message_text += resource_to_string(resource) + '\n'

    # send to the Teams webhook
    url = os.getenv('WEBHOOK_URL')
    message = {
        'text': message_text
    }
    response = requests.post(url, json=message, timeout=10)
    logger.debug('### teams response')
    logger.debug(response.status_code)
    # the response body is not guaranteed to be JSON, so log it as text
    logger.debug(response.text)

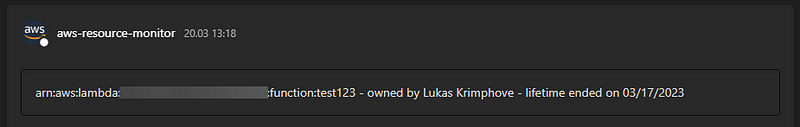

def resource_to_string(resource):
    # one line per resource: ARN, owner and end date (if tagged)
    result = resource['ResourceARN']
    for tag in resource['Tags']:
        if tag['Key'] == f'{TAG_PREFIX}owner:name':
            result = f"{result} - owned by {tag['Value']}"
        if tag['Key'] == f'{TAG_PREFIX}lifetime:end':
            result = f"{result} - lifetime ended on {tag['Value']}"
    return result

def lambda_handler(event, context):
    logger.debug('## ENVIRONMENT VARIABLES')
    logger.debug(json.dumps(dict(**os.environ), indent=4))
    logger.debug('## EVENT')
    logger.debug(json.dumps(event, indent=4))
    try:
        logger.debug('## MAIN FUNCTION START')
        init_clients()
        main(event, context)
    except Exception as e:
        logger.error(f'Main function raised exception {type(e).__name__} because {e}.')
        logger.exception(e)
        # re-raise so that Lambda marks the invocation as failed
        raise
    logger.debug('## MAIN FUNCTION END')
    return 'finished'
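The part of the function that decides which resources require attention is easy to get wrong, so it can help to pull it into a small function and test it locally without AWS. The following is a sketch, not the code I deployed: is_overdue and its parameters are hypothetical names, and tag_prefix is expected to include the trailing colon, like TAG_PREFIX above. Note that a malformed date makes datetime.strptime raise a ValueError. This sketch assumes that such a resource should be reported rather than abort the whole run.

```python
from datetime import datetime

DATE_FORMAT = "%m/%d/%Y"  # mm/dd/yyyy, as in the tagging convention


def is_overdue(resource: dict, tag_prefix: str, now: datetime) -> bool:
    """Return True if the end date is missing, unreadable, or not in the future."""
    end_key = f"{tag_prefix}lifetime:end"
    for tag in resource.get("Tags", []):
        if tag["Key"] == end_key:
            try:
                return datetime.strptime(tag["Value"], DATE_FORMAT) <= now
            except ValueError:
                # Assumption: report resources with a malformed date instead of crashing.
                return True
    # Assumption: a restricted resource without an end date needs attention too.
    return True


# Hypothetical sample in the shape returned by get_resources.
sample = {
    "ResourceARN": "arn:aws:ec2:eu-central-1:123456789012:instance/i-0abc",
    "Tags": [{"Key": "acme:lifetime:end", "Value": "06/01/2024"}],
}
sample_is_overdue = is_overdue(sample, "acme:", datetime(2024, 6, 15))
```

Passing the current time in as a parameter keeps the check deterministic, so You can test it with fixed dates.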

You have to change a few things in the configuration:

- Your Lambda function needs a Lambda layer containing the Python Requests library. Check out the documentation to learn more about layers.
- It needs permission to access the AWS Resource Groups Tagging API. You have to allow it to use the tag:GetResources action on all of Your account's resources.
- You have to add an environment variable called WEBHOOK_URL containing Your MS Teams webhook URL (more on this later).
- You can add another variable called TAG_PREFIX to support a custom prefix. Set it without the trailing colon, because the code appends it.
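The permission from the list above could look like the following policy document, written here as a Python dictionary and printed as JSON so You can paste it into the IAM console. This is a minimal sketch: the Sid is a hypothetical label, and the function still needs its usual permissions for writing logs.

```python
import json

# Minimal policy for the function's execution role.
# "Resource": "*" mirrors the requirement to cover all resources in the account.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowReadingTaggedResources",  # hypothetical label
            "Effect": "Allow",
            "Action": "tag:GetResources",
            "Resource": "*",
        }
    ],
}

print(json.dumps(policy, indent=2))
```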

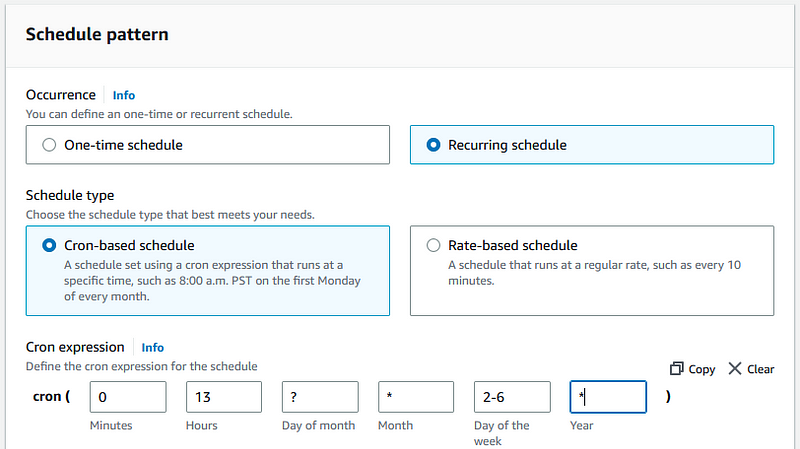

The EventBridge schedule

Go to EventBridge and create a new schedule.

You can create a recurring schedule by entering a Cron expression. A valid Cron expression is 0 13 ? * 2-6 *. This expression triggers Your function every workday (Monday to Friday) at 13:00. Next, select Your Lambda function as the target and create a new role for it. For the other settings, You can keep the default configuration.
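If You prefer code over the console, the same schedule can be sketched with boto3's EventBridge Scheduler client. This is an assumption-laden sketch: the schedule name, the UTC time zone, and both function names are my own choices for illustration, and role_arn has to point to a role that EventBridge Scheduler may assume and that is allowed to invoke the function.

```python
def build_schedule_request(
    function_arn: str,
    role_arn: str,
    name: str = "resource-lifetime-check",  # hypothetical schedule name
) -> dict:
    """Assemble the keyword arguments for the scheduler's create_schedule call."""
    return {
        "Name": name,
        # Monday to Friday (2-6) at 13:00.
        "ScheduleExpression": "cron(0 13 ? * 2-6 *)",
        # Assumption: UTC; pick the time zone Your team works in.
        "ScheduleExpressionTimezone": "UTC",
        "FlexibleTimeWindow": {"Mode": "OFF"},
        "Target": {"Arn": function_arn, "RoleArn": role_arn},
    }


def create_schedule(function_arn: str, role_arn: str) -> str:
    """Create the schedule and return its ARN. Needs boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without AWS

    scheduler = boto3.client("scheduler")
    response = scheduler.create_schedule(**build_schedule_request(function_arn, role_arn))
    return response["ScheduleArn"]
```

Separating the request builder from the API call lets You inspect or test the configuration before anything is created in Your account.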

The MS Teams integration

Now, go to the Teams channel You want to write the messages to and create a new incoming webhook. Teams will then give You a URL. This is the URL You have to set as the WEBHOOK_URL environment variable in the Lambda function. You can also set a fitting icon for the webhook's Teams user.
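To try the webhook before wiring it into the function, a small script is enough. The sketch below swaps the Requests library for Python's built-in urllib, so it runs without extra packages. build_payload and post_to_teams are hypothetical names, and the bullet formatting assumes that Teams renders the text as Markdown.

```python
import json
import urllib.request


def build_payload(lines: list[str]) -> dict:
    """Turn one line per resource into the {'text': ...} body sent to the webhook."""
    # Assumption: Teams renders Markdown, so each line becomes a bullet.
    return {"text": "\n".join(f"- {line}" for line in lines)}


def post_to_teams(webhook_url: str, payload: dict, timeout: float = 10.0) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Calling post_to_teams with Your webhook URL and build_payload(["test message"]) should make a message appear in the channel.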

Limitation

This will only work if You and Your team members set the tags. Also, this will only show You resources in the region where You deployed the function. If You use multiple regions, You’ll also have to deploy the function in multiple regions.
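As an alternative to deploying the function once per region, a single function could query several regions in turn. The following is only a sketch of that idea, not something I have deployed: collect_across_regions and client_factory are hypothetical names, and the region list is up to You. It uses boto3's paginator for get_resources, which handles the pagination token internally.

```python
from typing import Callable, Iterable


def collect_across_regions(
    regions: Iterable[str],
    client_factory: Callable[[str], object],
    tag_filters: list[dict],
) -> list[dict]:
    """Collect all matching resources from every given region."""
    resources = []
    for region in regions:
        # One tagging client per region, because the API only returns
        # resources of the region the client is bound to.
        client = client_factory(region)
        paginator = client.get_paginator("get_resources")
        for page in paginator.paginate(TagFilters=tag_filters):
            resources.extend(page["ResourceTagMappingList"])
    return resources


# Usage with boto3 (requires AWS credentials):
#   import boto3
#   factory = lambda region: boto3.client("resourcegroupstaggingapi", region_name=region)
#   found = collect_across_regions(["eu-central-1", "us-east-1"], factory, tag_filters)
```

Passing the client factory in as a parameter keeps the function testable with a fake client.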

If You are interested in more content on AWS You may want to check out my last Medium story about Granted:

https://krimphove.site/blog/how-to-use-granted-to-log-in-to-multiple-aws-accounts-at-the-same-time